hypervisor overview

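A hypervisor lets multiple operating systems share a single CPU (or several CPUs on a multi-core system) and coordinates the virtual machines running on it. For this reason a hypervisor is also called a virtual machine monitor (VMM).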

- On x86 systems, Intel and AMD each added hardware virtualization extensions to their processors: Intel VT (VT-x) and AMD-V, respectively.
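You can check whether a processor advertises these extensions by reading CPUID. Intel reports VMX in CPUID leaf 1, ECX bit 5. AMD reports SVM in extended leaf 0x80000001, ECX bit 2.

The sketch below assumes GCC or Clang on x86, because it uses the compiler-provided `<cpuid.h>` helper `__get_cpuid`. The enum and function names are hypothetical. A set bit only means the CPU supports the feature; firmware may still have it disabled.

```c
#include <cpuid.h>

/* Hypothetical names; bit positions follow the Intel/AMD CPUID documentation. */
#define VMX_BIT (1u << 5) /* CPUID.01H:ECX[5]       -> Intel VT-x  */
#define SVM_BIT (1u << 2) /* CPUID.80000001H:ECX[2] -> AMD-V (SVM) */

enum virt_ext { VIRT_NONE = 0, VIRT_VMX = 1, VIRT_SVM = 2 };

/* Pure decoding step, kept separate so it can be tested on any machine. */
enum virt_ext decode_virt_ext(unsigned int ecx_leaf1, unsigned int ecx_ext1)
{
    if (ecx_leaf1 & VMX_BIT)
        return VIRT_VMX;
    if (ecx_ext1 & SVM_BIT)
        return VIRT_SVM;
    return VIRT_NONE;
}

/* Queries the running CPU; a leaf the CPU does not implement reads as 0. */
enum virt_ext detect_virt_ext(void)
{
    unsigned int eax, ebx, ecx = 0, edx, ecx1 = 0, ecx_ext = 0;

    if (__get_cpuid(1, &eax, &ebx, &ecx, &edx))
        ecx1 = ecx;
    ecx = 0;
    if (__get_cpuid(0x80000001, &eax, &ebx, &ecx, &edx))
        ecx_ext = ecx;
    return decode_virt_ext(ecx1, ecx_ext);
}
```

Inside a virtual machine, the bit is usually clear unless the outer hypervisor exposes nested virtualization.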

There are two types of hypervisor:

- Type-1: runs directly on the host hardware, controlling hardware resources and managing the guest operating systems (the OSes installed in the virtual machines).

- Type-2: runs as an ordinary program on top of a conventional operating system, and each guest operating system runs as a process on the host. QEMU and VMware Workstation are Type-2 hypervisors.

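Besides coordinating access to hardware resources, a hypervisor also enforces isolation between virtual machines. When the server boots and starts the hypervisor, it loads the guest operating system of every virtual machine and gives each one an appropriate share of memory, CPU, network and disk.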

An important use of hypervisors on Linux is KVM.

hypervisor & KVM

KVM (Kernel-based Virtual Machine) is a loadable module of the Linux kernel. It provides kernel-resident virtualization infrastructure for Linux on x86 hardware.

- KVM turns a Linux host into a hypervisor (virtual machine monitor). On x86 it requires hardware with virtualization support (Intel VT-x or AMD-V), and it implements full virtualization.

KVM is a driver that manages virtual hardware devices. It exposes the character device /dev/kvm as its management interface.

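Here is a minimal sketch of reaching this interface from user space. It assumes a Linux host where the kernel UAPI header `<linux/kvm.h>` is installed, and the helper name is hypothetical. Every KVM session starts by opening /dev/kvm and checking the API version; the KVM API documentation fixes that version at 12 (`KVM_API_VERSION`).

```c
#define _GNU_SOURCE /* for O_CLOEXEC under strict C modes */
#include <fcntl.h>
#include <linux/kvm.h>
#include <sys/ioctl.h>
#include <unistd.h>

/* Opens /dev/kvm and returns the KVM API version, or -1 if the device
 * cannot be opened (module not loaded, no permission, no VT-x/AMD-V). */
int kvm_api_version(void)
{
    int kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC);
    if (kvm < 0)
        return -1;
    int version = ioctl(kvm, KVM_GET_API_VERSION, 0); /* -1 on error */
    close(kvm);
    return version;
}
```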

- System call path: sys_ioctl -> ksys_ioctl -> do_vfs_ioctl -> vfs_ioctl -> unlocked_ioctl -> [kvm_dev_ioctl, kvm_vcpu_ioctl, kvm_vm_ioctl]
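The last hop of this path is plain function-pointer dispatch. Every open file carries a file_operations table, and vfs_ioctl calls that table's unlocked_ioctl member. In KVM the tables are:

- kvm_chardev_ops for /dev/kvm
- kvm_vm_fops for VM fds
- kvm_vcpu_fops for vCPU fds

Below is a user-space model of that mechanism. All model_* names are hypothetical stand-ins, not kernel code.

```c
#include <errno.h>
#include <stddef.h>

struct model_file;

struct model_fops {
    long (*unlocked_ioctl)(struct model_file *filp, unsigned int cmd, unsigned long arg);
};

struct model_file {
    const struct model_fops *f_op;
    void *private_data; /* the kernel keeps struct kvm / struct kvm_vcpu here */
};

enum { BY_DEV_IOCTL = 1, BY_VM_IOCTL, BY_VCPU_IOCTL };

static long model_dev_ioctl(struct model_file *f, unsigned int cmd, unsigned long arg)
{
    (void)f; (void)cmd; (void)arg;
    return BY_DEV_IOCTL;
}

static long model_vm_ioctl(struct model_file *f, unsigned int cmd, unsigned long arg)
{
    (void)f; (void)cmd; (void)arg;
    return BY_VM_IOCTL;
}

static long model_vcpu_ioctl(struct model_file *f, unsigned int cmd, unsigned long arg)
{
    (void)f; (void)cmd; (void)arg;
    return BY_VCPU_IOCTL;
}

/* Stand-ins for kvm_chardev_ops, kvm_vm_fops and kvm_vcpu_fops. */
const struct model_fops model_chardev_fops = { .unlocked_ioctl = model_dev_ioctl };
const struct model_fops model_vm_fops = { .unlocked_ioctl = model_vm_ioctl };
const struct model_fops model_vcpu_fops = { .unlocked_ioctl = model_vcpu_ioctl };

/* Models vfs_ioctl: call whatever handler the fd was created with. */
long model_vfs_ioctl(struct model_file *filp, unsigned int cmd, unsigned long arg)
{
    if (filp->f_op == NULL || filp->f_op->unlocked_ioctl == NULL)
        return -ENOTTY; /* what vfs_ioctl returns when there is no handler */
    return filp->f_op->unlocked_ioctl(filp, cmd, arg);
}
```

Which of the three KVM handlers runs depends only on the table installed when the fd was created, not on the ioctl command itself.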

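The related API is a set of ioctl commands issued on three kinds of file descriptors:

- the system fd obtained by opening /dev/kvm
- the VM fd returned by KVM_CREATE_VM
- the vCPU fd returned by KVM_CREATE_VCPU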


A reference example:

```c
const uint8_t code[] = {
```

- Excerpted from a KVM pwn challenge I worked on earlier. It is for reference only and probably will not compile as-is.
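Because the excerpt cannot be compiled, here is a self-contained sketch of the same sequence: create the VM, map guest memory, create a vCPU, set its registers, then loop on KVM_RUN. It is modeled on the minimal example from LWN's "Using the KVM API" article; the guest bytes are a tiny real-mode program, not the challenge's code.

It assumes an x86-64 Linux host with /dev/kvm and `<linux/kvm.h>`. The name run_demo is hypothetical.

```c
#define _GNU_SOURCE
#include <fcntl.h>
#include <linux/kvm.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>

/* 16-bit real-mode guest: print AL+BL as a digit, then '\n', on port 0x3f8; halt. */
static const uint8_t guest_code[] = {
    0xba, 0xf8, 0x03, /* mov $0x3f8, %dx */
    0x00, 0xd8,       /* add %bl, %al    */
    0x04, '0',        /* add $'0', %al   */
    0xee,             /* out %al, (%dx)  */
    0xb0, '\n',       /* mov $'\n', %al  */
    0xee,             /* out %al, (%dx)  */
    0xf4,             /* hlt             */
};

/* Returns how many bytes the guest wrote to port 0x3f8 (copied into out),
 * or -1 on any failure, e.g. when /dev/kvm is unavailable. */
int run_demo(char *out, size_t outlen)
{
    int ret = -1, vmfd = -1, vcpufd = -1, run_size = 0;
    size_t n = 0;
    void *mem = MAP_FAILED;
    struct kvm_run *run = MAP_FAILED;
    struct kvm_userspace_memory_region region;
    struct kvm_sregs sregs;
    struct kvm_regs regs;

    int kvm = open("/dev/kvm", O_RDWR | O_CLOEXEC);
    if (kvm < 0)
        return -1;
    if ((vmfd = ioctl(kvm, KVM_CREATE_VM, 0UL)) < 0)
        goto out;

    /* One page of guest RAM at guest physical address 0x1000 (memory slot 0). */
    mem = mmap(NULL, 0x1000, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED)
        goto out;
    memcpy(mem, guest_code, sizeof guest_code);
    memset(&region, 0, sizeof region);
    region.guest_phys_addr = 0x1000;
    region.memory_size = 0x1000;
    region.userspace_addr = (uint64_t)(uintptr_t)mem;
    if (ioctl(vmfd, KVM_SET_USER_MEMORY_REGION, &region) < 0)
        goto out;

    /* vCPU 0 plus its shared kvm_run area. */
    if ((vcpufd = ioctl(vmfd, KVM_CREATE_VCPU, 0UL)) < 0)
        goto out;
    if ((run_size = ioctl(kvm, KVM_GET_VCPU_MMAP_SIZE, NULL)) <= 0)
        goto out;
    run = mmap(NULL, (size_t)run_size, PROT_READ | PROT_WRITE, MAP_SHARED, vcpufd, 0);
    if (run == MAP_FAILED)
        goto out;

    /* Flat real mode: CS.base = 0, so RIP 0x1000 is guest physical 0x1000. */
    if (ioctl(vcpufd, KVM_GET_SREGS, &sregs) < 0)
        goto out;
    sregs.cs.base = 0;
    sregs.cs.selector = 0;
    if (ioctl(vcpufd, KVM_SET_SREGS, &sregs) < 0)
        goto out;
    memset(&regs, 0, sizeof regs);
    regs.rip = 0x1000;
    regs.rax = 2;
    regs.rbx = 2;
    regs.rflags = 0x2; /* bit 1 of RFLAGS is reserved and must be 1 */
    if (ioctl(vcpufd, KVM_SET_REGS, &regs) < 0)
        goto out;

    for (;;) {
        if (ioctl(vcpufd, KVM_RUN, NULL) < 0)
            goto out;
        if (run->exit_reason == KVM_EXIT_HLT) {
            ret = (int)n;
            goto out;
        }
        if (run->exit_reason != KVM_EXIT_IO || run->io.direction != KVM_EXIT_IO_OUT ||
            run->io.port != 0x3f8 || run->io.size != 1 || run->io.count != 1)
            goto out; /* any other exit is unexpected for this guest */
        if (n < outlen)
            out[n++] = *((char *)run + run->io.data_offset);
    }
out:
    if (run != MAP_FAILED) munmap(run, (size_t)run_size);
    if (mem != MAP_FAILED) munmap(mem, 0x1000);
    if (vcpufd >= 0) close(vcpufd);
    if (vmfd >= 0) close(vmfd);
    close(kvm);
    return ret;
}
```

On a KVM-capable host, `char buf[8]; run_demo(buf, sizeof buf);` should capture the two bytes `'4'` and `'\n'`, since AL = BL = 2.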

KVM ioctl

The kernel functions behind the KVM API are:

- kvm_dev_ioctl: handles kvmfd (the fd of /dev/kvm)
- kvm_vm_ioctl: handles vmfd
- kvm_vcpu_ioctl: handles vcpufd
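To make the three-way split concrete, this sketch classifies a few well-known commands from `<linux/kvm.h>` by the file descriptor that accepts them. It is not exhaustive; some commands, such as KVM_CHECK_EXTENSION, are accepted at more than one level. The enum and function are hypothetical.

```c
#include <linux/kvm.h>

enum kvm_fd_level { FD_UNKNOWN = 0, FD_SYSTEM, FD_VM, FD_VCPU };

enum kvm_fd_level kvm_ioctl_level(unsigned int cmd)
{
    switch (cmd) {
    case KVM_GET_API_VERSION:
    case KVM_CREATE_VM:
    case KVM_GET_VCPU_MMAP_SIZE:
        return FD_SYSTEM; /* reaches kvm_dev_ioctl */
    case KVM_CREATE_VCPU:
    case KVM_SET_USER_MEMORY_REGION:
        return FD_VM;     /* reaches kvm_vm_ioctl */
    case KVM_RUN:
    case KVM_GET_REGS:
    case KVM_SET_REGS:
    case KVM_GET_SREGS:
    case KVM_SET_SREGS:
        return FD_VCPU;   /* reaches kvm_vcpu_ioctl */
    default:
        return FD_UNKNOWN;
    }
}
```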

kvm_dev_ioctl:

```c
static long kvm_dev_ioctl(struct file *filp,
```

kvm_vm_ioctl:

```c
static long kvm_vm_ioctl(struct file *filp,
```

kvm_vcpu_ioctl:

```c
static long kvm_vcpu_ioctl(struct file *filp,
```

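Each of these three functions serves one of the three kinds of KVM file descriptor (in Linux, everything is a file). Each one dispatches on the ioctl command in a switch-case.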

When KVM creates a virtual machine and runs instructions in it, the key steps are:

```c
int vmfd = ioctl(kvm, KVM_CREATE_VM, (unsigned long)0); /* create the VM */
```

Before analyzing the individual functions, let's look at a few important structures.

KVM structures

The main structure created along with a VM:

```c
struct kvm {
```

The main structure created along with a vcpu:

```c
struct kvm_vcpu {
```

The structure used when switching Intel Processor Trace (PT) state between host and guest:

```c
struct pt_ctx {
```

The structures used to set the special registers and the general-purpose registers, respectively:

```c
struct kvm_sregs {
```

```c
struct kvm_regs {
```
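In practice, the two structures are used together when preparing a vCPU. kvm_sregs is first read back with KVM_GET_SREGS and then patched, while kvm_regs can be filled from scratch.

The helper below is a hypothetical sketch for a guest that starts in 16-bit real mode. It assumes the entry point lies below 64 KiB, so it fits in IP with CS.base = 0. It only prepares the structs; the caller would then issue KVM_SET_SREGS and KVM_SET_REGS.

```c
#include <linux/kvm.h>
#include <stdint.h>
#include <string.h>

/* `sregs` should hold the values just read with KVM_GET_SREGS; only CS is
 * rewritten so that code executes from guest physical address `entry`. */
void prepare_real_mode(struct kvm_sregs *sregs, struct kvm_regs *regs, uint64_t entry)
{
    sregs->cs.base = 0;
    sregs->cs.selector = 0;

    memset(regs, 0, sizeof *regs);
    regs->rip = entry;
    regs->rflags = 0x2; /* bit 1 of RFLAGS is reserved and must be 1 */
}
```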

Analysis of the underlying KVM code

Creating vmfd: kvm_dev_ioctl_create_vm

```c
static int kvm_dev_ioctl_create_vm(unsigned long type)
```

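- Normally every MMIO access makes the VM exit to QEMU. Coalesced MMIO avoids some of these exits: for regions registered as coalesced, KVM records the writes in a ring buffer shared with user space instead of exiting. QEMU then processes all the buffered writes together at the next exit.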

- After `struct kvm` is initialized, the function allocates a file descriptor, binds it to the `struct kvm`, and returns the fd.
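The batching can be modeled as a single-producer/single-consumer ring, loosely following the first/last indices of the kernel's kvm_coalesced_mmio_ring:

- KVM appends writes at last.
- User space consumes them from first.
- One slot stays empty so that a full ring can be told apart from an empty one.

When the ring is full, the write falls back to an ordinary MMIO exit. The slot count, structs and functions below are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define RING_SLOTS 8

struct mmio_entry { uint64_t phys_addr; uint32_t len; uint8_t data[8]; };
struct mmio_ring { uint32_t first, last; struct mmio_entry e[RING_SLOTS]; };

/* "KVM" side: record a write instead of exiting. Returns false when the ring
 * is full (or the write is too large), meaning a normal exit is required. */
bool ring_push(struct mmio_ring *r, uint64_t addr, const void *data, uint32_t len)
{
    uint32_t next = (r->last + 1) % RING_SLOTS;
    if (next == r->first || len > sizeof r->e[0].data)
        return false;
    r->e[r->last].phys_addr = addr;
    r->e[r->last].len = len;
    memcpy(r->e[r->last].data, data, len);
    r->last = next;
    return true;
}

/* "QEMU" side: at the next exit, replay all buffered writes in order.
 * Returns the number processed; `sink` receives the last one. */
unsigned ring_drain(struct mmio_ring *r, struct mmio_entry *sink)
{
    unsigned n = 0;
    while (r->first != r->last) {
        *sink = r->e[r->first]; /* a real VMM would dispatch to the device here */
        r->first = (r->first + 1) % RING_SLOTS;
        n++;
    }
    return n;
}
```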

Creating vcpufd: kvm_vm_ioctl_create_vcpu

```c
static int kvm_vm_ioctl_create_vcpu(struct kvm *kvm, u32 id)
```

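- Each vcpu needs one page to hold its `struct kvm_run` data. Mapping the vcpufd with mmap exposes this kvm_run page to user space at the start of the mapping; the total size to map is reported by KVM_GET_VCPU_MMAP_SIZE.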

- After `struct kvm_vcpu` is initialized, the function calls `create_vcpu_fd` to create the vcpufd and registers the `kvm_vcpu_fops` file operations.
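The bookkeeping between struct kvm and struct kvm_vcpu can be sketched as follows:

- The VM owns the array of vCPUs.
- Each vCPU keeps a back pointer to its VM.
- An id that is already in use is rejected with -EEXIST.
- An out-of-range id, or a VM that is already full, is rejected with -EINVAL.

This is a simplified, hypothetical model; TOY_MAX_VCPUS and all toy_* names are made up. The real function also allocates the kvm_run page, calls the architecture hooks, and returns a file descriptor instead of an index.

```c
#include <errno.h>

#define TOY_MAX_VCPUS 4

struct toy_kvm;
struct toy_vcpu { struct toy_kvm *kvm; unsigned int vcpu_id; };
struct toy_kvm {
    struct toy_vcpu vcpu_storage[TOY_MAX_VCPUS];
    struct toy_vcpu *vcpus[TOY_MAX_VCPUS];
    int online_vcpus;
};

/* Returns the new vCPU's index, or a negative errno value. */
int toy_create_vcpu(struct toy_kvm *kvm, unsigned int id)
{
    if (id >= TOY_MAX_VCPUS || kvm->online_vcpus == TOY_MAX_VCPUS)
        return -EINVAL;
    for (int i = 0; i < kvm->online_vcpus; i++)
        if (kvm->vcpus[i]->vcpu_id == id)
            return -EEXIST;

    int idx = kvm->online_vcpus;
    struct toy_vcpu *v = &kvm->vcpu_storage[idx];
    v->kvm = kvm;         /* back pointer, like vcpu->kvm */
    v->vcpu_id = id;
    kvm->vcpus[idx] = v;
    kvm->online_vcpus++;  /* published only after the vCPU is set up */
    return idx;
}
```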

Starting the VM: kvm_arch_vcpu_ioctl_run

```c
int kvm_arch_vcpu_ioctl_run(struct kvm_vcpu *vcpu)
```

- A vcpu ultimately has to execute on a physical CPU, so a context switch is needed:

- The host context is saved, the guest context is loaded and run, and the host context is restored on exit.

- The core function for running the VM is `vcpu_run`; its source is:

```c
static int vcpu_run(struct kvm_vcpu *vcpu)
```
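Stripped of details, vcpu_run is a loop around guest entry:

- A handler result greater than 0 means the exit was handled inside the kernel, so the guest can be re-entered.
- A result of 0 or less means control must return to user space.
- The loop also stops with -EINTR, reported to user space as KVM_EXIT_INTR, when a signal is pending.

The model below replaces real guest entries with a scripted list of results. All names are hypothetical.

```c
#include <errno.h>

enum { LOOP_EXIT_NONE = 0, LOOP_EXIT_INTR = 1 };

struct loop_vcpu {
    const int *results; /* what each successive guest entry "returns" */
    int n_results;
    int entries;        /* how many times the guest was entered */
    int signal_after;   /* signal pending once entries reaches this (-1: never) */
    int exit_reason;
};

static int fake_enter_guest(struct loop_vcpu *v)
{
    int r = v->entries < v->n_results ? v->results[v->entries] : 0;
    v->entries++;
    return r;
}

int model_vcpu_run(struct loop_vcpu *v)
{
    int r;
    for (;;) {
        r = fake_enter_guest(v);
        if (r <= 0)
            break; /* this exit has to be handled by user space */
        if (v->signal_after >= 0 && v->entries >= v->signal_after) {
            r = -EINTR;
            v->exit_reason = LOOP_EXIT_INTR;
            break;
        }
    }
    return r;
}
```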

- The core function for running a vcpu is `vcpu_enter_guest`:

```c
static int vcpu_enter_guest(struct kvm_vcpu *vcpu)
```
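After kvm_x86_ops.run returns, vcpu_enter_guest hands the exit to a vendor handler. On VMX, the basic exit reason indexes a table of handlers, kvm_vmx_exit_handlers.

The sketch keeps KVM's return convention: 1 means handled, re-enter the guest; 0 means return to user space. It also keeps the Intel SDM basic exit-reason numbers for CPUID (10), HLT (12) and I/O instructions (30). The handler bodies are simplified stand-ins. They assume no in-kernel irqchip and no in-kernel device on the accessed port, and the table size is an arbitrary choice.

```c
#include <stddef.h>

enum {
    EXIT_REASON_CPUID = 10,
    EXIT_REASON_HLT = 12,
    EXIT_REASON_IO_INSTRUCTION = 30,
    MAX_EXIT_REASON = 64, /* arbitrary table size for this sketch */
};

static int handle_cpuid(void) { return 1; } /* emulated in the kernel */
static int handle_halt(void)  { return 0; } /* reported as KVM_EXIT_HLT */
static int handle_io(void)    { return 0; } /* reported as KVM_EXIT_IO */

static int (*const exit_handlers[MAX_EXIT_REASON])(void) = {
    [EXIT_REASON_CPUID] = handle_cpuid,
    [EXIT_REASON_HLT] = handle_halt,
    [EXIT_REASON_IO_INSTRUCTION] = handle_io,
};

/* Returns the handler's result, or -1 if no handler exists for the reason. */
int handle_exit(unsigned int exit_reason)
{
    if (exit_reason >= MAX_EXIT_REASON || exit_handlers[exit_reason] == NULL)
        return -1;
    return exit_handlers[exit_reason]();
}
```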

- The context save and switch happen inside `kvm_x86_ops.run`, which on Intel (VMX) is `vmx_vcpu_run`:

```c
static struct kvm_x86_ops vmx_x86_ops __initdata = {
```
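The indirection through kvm_x86_ops keeps the generic x86 code vendor-neutral. The vendor module (kvm-intel or kvm-amd) supplies its ops at load time. In kernels that declare kvm_x86_ops as a struct, as `kvm_x86_ops.run` above implies, the vendor table is copied into it during hardware setup. That copy is also why vmx_x86_ops can be marked `__initdata`.

Here is a hypothetical toy version with made-up names:

```c
#include <string.h>

struct toy_x86_ops {
    const char *vendor;
    int (*run)(int vcpu_id);
};

static int toy_vmx_vcpu_run(int vcpu_id) { return 1000 + vcpu_id; } /* for vmx_vcpu_run */
static int toy_svm_vcpu_run(int vcpu_id) { return 2000 + vcpu_id; } /* for svm_vcpu_run */

/* Vendor templates; in the kernel these can be discarded after init. */
static const struct toy_x86_ops toy_vmx_ops = { "intel", toy_vmx_vcpu_run };
static const struct toy_x86_ops toy_svm_ops = { "amd", toy_svm_vcpu_run };

/* The single table that generic code calls through. */
struct toy_x86_ops toy_kvm_x86_ops;

void toy_hardware_setup(int intel)
{
    memcpy(&toy_kvm_x86_ops, intel ? &toy_vmx_ops : &toy_svm_ops, sizeof toy_kvm_x86_ops);
}

/* Generic code: the equivalent of calling kvm_x86_ops.run(vcpu). */
int toy_enter_guest(int vcpu_id)
{
    return toy_kvm_x86_ops.run(vcpu_id);
}
```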

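- In `vmx_vcpu_run`, `pt_guest_enter` and `pt_guest_exit` call `pt_load_msr` and `pt_save_msr` to swap the Intel PT MSRs (the `pt_ctx` state) between host and guest. The rest of the CPU state is switched by the hardware on VM entry and VM exit through the VMCS, and `__vmx_vcpu_run` saves and restores the general-purpose registers: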

```c
static inline void pt_load_msr(struct pt_ctx *ctx, u32 addr_range)
```

```c
static inline void pt_save_msr(struct pt_ctx *ctx, u32 addr_range)
```
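pt_guest_enter and pt_guest_exit follow a plain save/restore pattern:

- On entry, the host values are saved and the guest's are loaded.
- On exit, the guest's current values are saved and the host's are restored.

The toy below replaces the RTIT MSRs with an array and rdmsr/wrmsr with memcpy. The field count and names are hypothetical.

```c
#include <stdint.h>
#include <string.h>

#define N_MSRS 4

struct toy_pt_ctx { uint64_t msr[N_MSRS]; };

uint64_t toy_hw_msr[N_MSRS]; /* stands in for the real MSRs */

static void toy_save(struct toy_pt_ctx *ctx) { memcpy(ctx->msr, toy_hw_msr, sizeof ctx->msr); }
static void toy_load(const struct toy_pt_ctx *ctx) { memcpy(toy_hw_msr, ctx->msr, sizeof toy_hw_msr); }

/* VM entry: stash the host values, install the guest's. */
void toy_guest_enter(struct toy_pt_ctx *host, const struct toy_pt_ctx *guest)
{
    toy_save(host);
    toy_load(guest);
}

/* VM exit: capture what the guest left behind, put the host values back. */
void toy_guest_exit(const struct toy_pt_ctx *host, struct toy_pt_ctx *guest)
{
    toy_save(guest);
    toy_load(host);
}
```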

hypervisor & Qemu

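QEMU is a complete, standalone program that can emulate an entire computer, including the CPU, memory and I/O devices. It uses a special "recompiler" to translate binary code written for one processor, which makes it portable across platforms.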

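- Before hardware virtualization support existed, QEMU's pure-software approach used TCG (Tiny Code Generator) to translate guest instructions into instructions for the host architecture and then execute them.

- Once hardware-assisted hypervisors appeared, KVM took over QEMU's CPU and memory virtualization, while devices such as network cards and displays are still emulated in QEMU. Together, QEMU+KVM form a complete virtualization platform.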
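The division of labour is visible from the user-space side. KVM runs the guest code. When the guest touches an emulated device, the access surfaces as a KVM_EXIT_IO (or KVM_EXIT_MMIO) exit, which QEMU forwards to its device model.

The sketch below shows only that forwarding step for port writes. The table layout, the toy serial device and all names are hypothetical and much simpler than QEMU's actual memory/IO API.

```c
#include <stddef.h>
#include <stdint.h>

typedef void (*pio_write_fn)(void *opaque, uint16_t port, uint8_t val);

struct pio_device { uint16_t base, len; pio_write_fn write; void *opaque; };

struct toy_serial { char buf[64]; size_t n; };

static void serial_write(void *opaque, uint16_t port, uint8_t val)
{
    struct toy_serial *s = opaque;
    if (port == 0x3f8 && s->n < sizeof s->buf) /* data register only */
        s->buf[s->n++] = (char)val;
}

/* A COM1-like device occupying ports 0x3f8-0x3ff. */
struct pio_device make_serial(struct toy_serial *s)
{
    struct pio_device d = { 0x3f8, 8, serial_write, s };
    return d;
}

/* Called for an OUT exit: returns 0 if some device claimed the port, else -1. */
int dispatch_pio_write(struct pio_device *devs, size_t ndevs, uint16_t port, uint8_t val)
{
    for (size_t i = 0; i < ndevs; i++) {
        if (port >= devs[i].base && port < devs[i].base + devs[i].len) {
            devs[i].write(devs[i].opaque, port, val);
            return 0;
        }
    }
    return -1;
}
```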

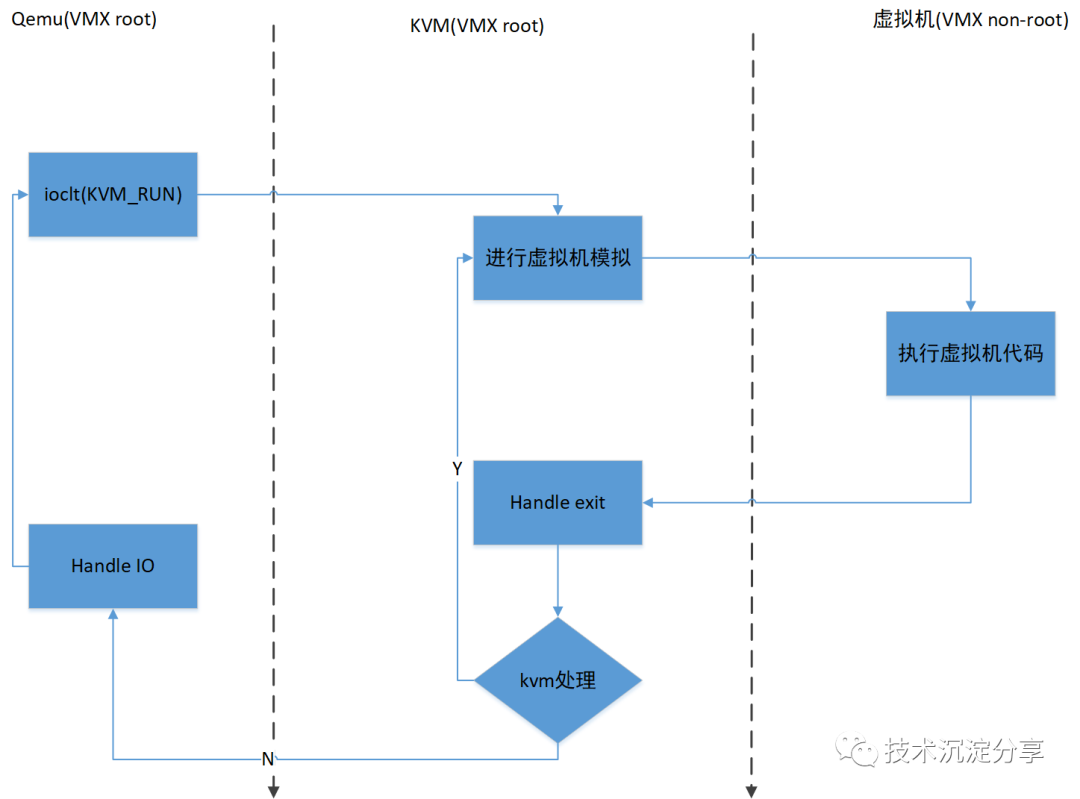

The figure above shows how QEMU and KVM, the virtualization tools on Linux, relate to each other.

Some virtual machines instead use native binaries to emulate execution on a CPU of a different architecture. For details, see this article: VM虚拟机技术简析 | Pwn进你的心 (ywhkkx.github.io)